“Humanity is acquiring all the right technology for all the wrong reasons.”

– R. Buckminster Fuller

*Disclaimer: when I use the term AI, I’m not referring only to Strong AI or AGI, but also to the “pre-AI” automation and ML models that exist today. The “concept of AI,” if you will. I’m not an AI researcher or even a competent enthusiast, so don’t take my knowledge of the technology as comprehensive.*

While riding the waves of the future’s existential crises, there’s more than enough to be worried about. There’s likely just as much to be optimistic about, though my brain (despite various training attempts) tends to look for the problems, so that I may identify, or at least be aware of, potential solutions. I spend way too much time online cutting through the brush with my query machete, attempting to find the “true” answers to my dilemmas. Unfortunately for me, I always come up short due to the whole “you can’t predict the future” thing. I don’t want to predict the future; I would just like more general assurance about some larger-scale concepts.

In the field of artificial intelligence, X-risk (short for existential risk) is defined as a risk that poses astronomically large negative consequences for humanity, such as human extinction or permanent global totalitarianism. There’s a ton of writing on this subject, and I don’t want to go into it here: it’s deeply researched, many people are working on it, and every company trying to develop artificial general intelligence has departments for safety and risk, arguably the most important part of AI research. Instead, I want to talk about how AI is affecting us creatively and will continue to do so (the more specific “micro” creative risks of the future of AI), where the line on development should be drawn, and the why behind some of the work in the field towards an artistic AI.

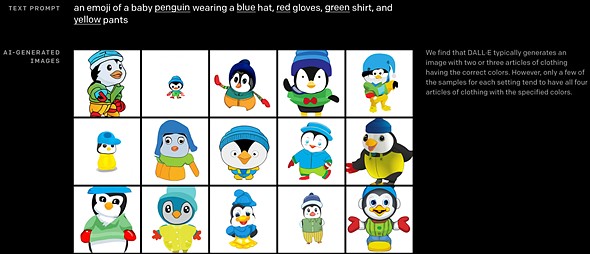

As a layman, I’ve understood the purpose of AI to be alleviating the tedious, time-consuming drudgery that humans either aren’t good at or dislike doing in their jobs. As someone who spends time on the tech-focused parts of the internet, I’ve seen attempts to create ML models that take over not only the monotonous but the enjoyable as well. A model is an algorithm that automatically improves through experience and the use of data. You teach a model on what’s known as training data so that it can make decisions and predictions without being explicitly programmed to do so. While I understand we haven’t achieved “true AI,” models like DALL-E, a version of GPT-3 (one of the most cutting-edge language models), present some problems that should be, and are being, discussed in the community.

Just because we can doesn’t mean we should

The technology industry at large has a habit of trying to solve everything using technology, and it tends to focus on the wrong problems. Tech is trying to “solve” aging when it should be focusing on solving premature, unnecessary death. Many other things the industry sets out to solve would be better tackled by reworking existing systems, or by simple communication. We don’t need a new productivity tool or a social media app; we need understanding, civility, and community. Technology isn’t the problem; greed is. The problems we face as a society are complex and systemic, and technology should be used as a seasoning rather than the main ingredient in many of our recipes for progress. Even if we can create true AI, does that mean we should?

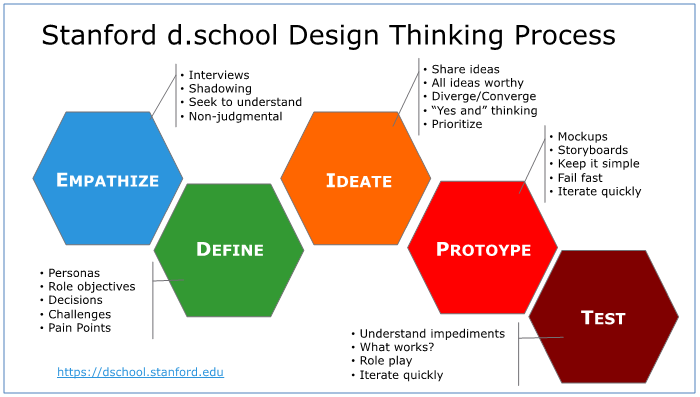

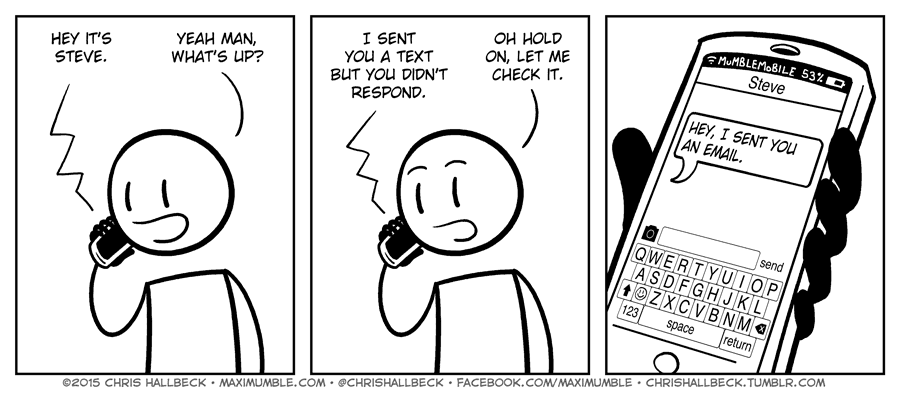

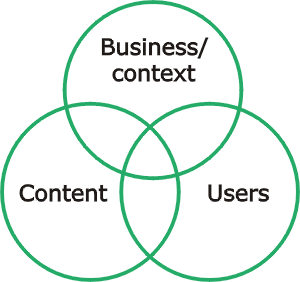

In the design and product industry, good products are built and designed by asking “Why?” If the product has no purpose, or the design doesn’t solve the problems of the intended audience, then why are we building it? We should ask the same of AI. What is the goal of AI? What are we striving for? We have enormous problems to face as a global society: political, environmental, and more. AI may be able to help with some of these issues, but not everyone is working on solving these problems. Many showcases of AI online are in a creative capacity: an AI that writes a story, paints a picture, or creates a song. At a lower level, it’s a novelty, fun for all, and possibly useful to commercial artists. At a higher level, it becomes a crisis of meaning.

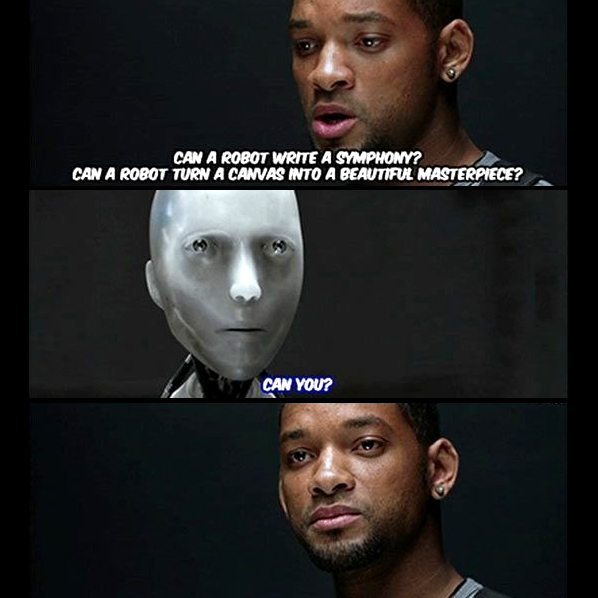

We can’t talk about micro creative X-risks of AI without talking about the macro as well. As the technology quickly gets better, how long before it can write a coherent novel, paint a true work of art, or write the next hit song? Where is the line when working on this type of technology? Most of the stated reasoning behind this work is to help humans with their creative work, not take it over. A partner rather than a competitor. That would be nice if that’s where it ended. A technology that helps with writer’s block, great. A technology that helps brainstorm ideas, great. But if the technology exists, what’s to stop people from going further and having AI do it all on its own? True, it will raise the bar for creatives. If it’s easier for anyone to create a bad romance novel, then the true romance novelist will have to work harder to make better work. That’s a good thing, but will many consumers care?

Many consumers of art, whether it’s visual, written, or auditory, want the human relationship that comes from being the audience. That’s where much of the enjoyment of good art comes from: knowing that someone else in the world created it and is connecting with you through their art, knowing it’s from a fellow ape. If mindless consumption is the goal, then that connection isn’t really relevant at all, right? Let the floodgates of crap open. If 99% of things are “bad” anyway, what’s the difference if it becomes 99.8%?

The future of art – process over product?

I believe that there will probably be three categories of art in the future: human-made, human + machine-made, and machine-made. What does machine-made art look like? Judging from today’s generative artists, it’s surreal and abstract, while models like Artbreeder and DALL-E create more concrete examples. Maybe in the future there will be an Etsy just for human-made products, if non-artists get the idea of taking the easy road to make money with little effort. I think without question there will be AI-augmented work; even something as simple as Grammarly can constitute an “augmented experience.” But I’m hopeful that the industries that value the art they create will focus on human-made and augmented work rather than just machine-made. Machines may make “art,” but they cannot make your art (on their own). There will still be a reason to create, to get your music, thoughts, and visuals into the world.

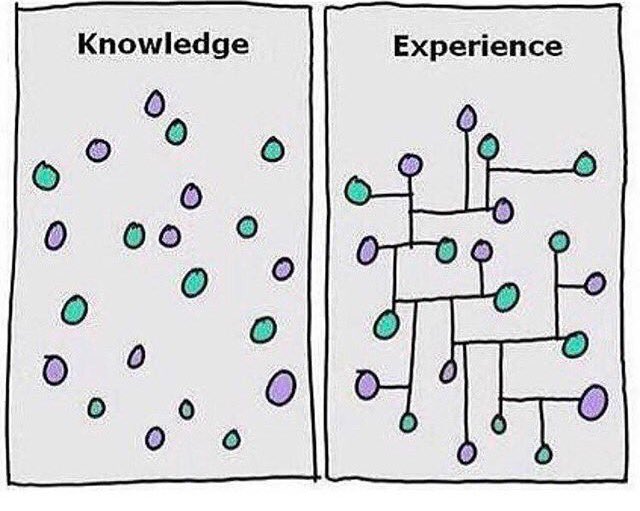

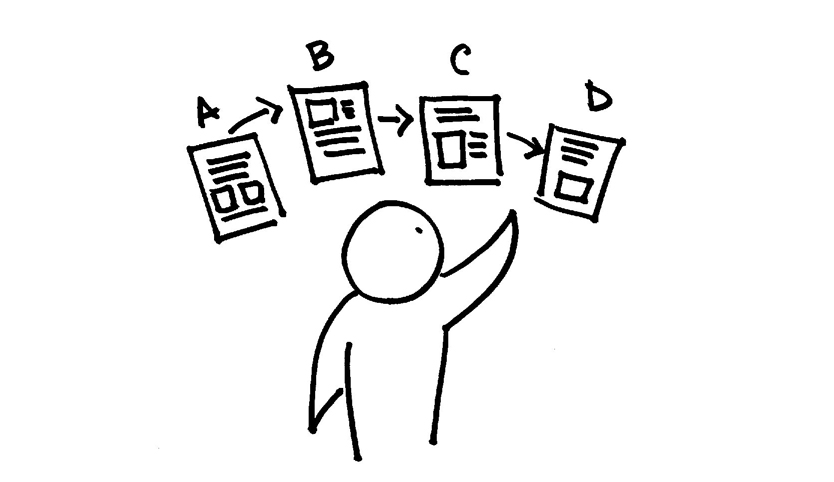

Even though computers are better at Go or Chess, people still play. When it comes to art, the goal isn’t to be the best at something; it’s the act of creation and connection. So does it matter if someone else can paint “better” than you? Will you stop painting because you aren’t the “best”? Maybe AI will just be another factor in that decision: if someone or something is better than you at doing something, why do it? Your art must come from you and your process; art is not just the product you create. If you can push a button and a painting appears, even if it’s technically good, who cares? It’s still not yours. AI is understood to be good at delivering a correct result, rather than an interesting process. As humans, how and why we get from A to B is just as important as what B actually is. We value the process over the product, especially in art, and process is exactly what the AI art of today lacks.

Hold the line

Keep in mind that we don’t need to achieve true AI or AGI to induce these fears. Today’s models, like GPT-3 and Google’s T5, and whatever GPT-4 or 5 might be capable of, are already causes for concern in many situations. One example is this generative AI art model created by an artist called Rivers Have Wings, which can attempt to mimic the style of another artist. Another is this Twitter account, where users simply name a concept and the AI creates a digital work based on the suggestion. But when you view completely AI-generated art, it feels empty. To me, it feels uneasy and a bit disturbing, like you’re not supposed to be looking at it. Maybe that’s the inherent attraction some find in these pieces, that there’s something humans aren’t meant to understand. Maybe I’m reading too much into it and it’s simply a novelty. AI artist Mario Klingemann, also known as Quasimondo, believes this is nothing more than “a storm in a teacup.”

“They create instant gratification even if you have no deeper knowledge of how they work and how to control them, they currently attract charlatans and attention seekers who ride on that novelty wave,” Klingemann says.

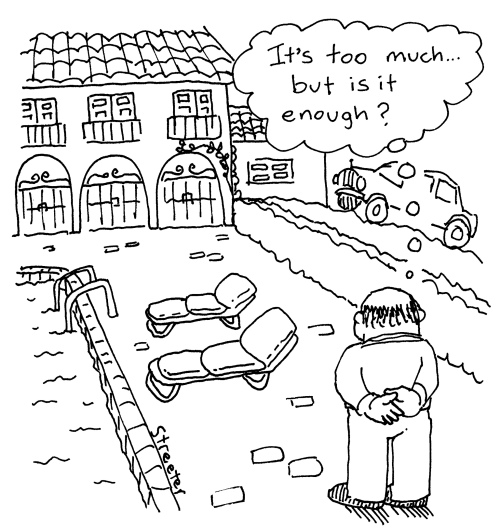

So what’s the right amount of concern to have these days? If you talk to Joe Schmoe off the street, he probably falls into one of two camps: he isn’t worried at all because he has no pulse on the technology, or he thinks AI will take over the world and make us redundant. If you go online to the Twitter or Reddit communities related to AI, you’ll find the same. Many think “it’s overhyped, interesting stuff but don’t worry for a long time,” or they think “the singularity is near and we’re on our way out, might as well just give up now.” Some think we will see large disruptions from true AI in our lifetimes; others think it’s a pipe dream and we may never get past Turing completion.

For example, take this Reddit answer to the question: Can art that has been created by Artificial Intelligence be considered as “real art” or not?

“I’d tend to lean towards no. Art could broadly be defined as an expression of the human condition/experience rendered through a specific medium. So I’d say the output of an AI wouldn’t be defined as art. Though defining art definitely is almost impossible so it’s hard to come to a conclusion.”

And another comment about the future of creativity and art which gives a more bleak and depressing scenario:

“I would argue that the end of art is to arrive in our lifetime.

First for specialized narrow AI segments, and eventually all creativity, as nobody will be able to outperform the synthetic creative machines that will draw from more inputs and more feedback knowledge and data than a human brain ever could.

Music, drawing, painting, and creative writing will be outperformed by ML and the consumer will be better understood, predicted, and manipulated than by any human once could.

At some point, humanity will encounter the great stall, where you no longer feel like stepping into the arena and competing. When the machines will out-draw, out-create, and out-sell your wares, you will have to step out of the arena and submit to the loss of your creative self never being able to come close.

There will be a time when the natural artist vs. the hybrid artist will co-exist, but that era will come to close too.”

Sounds great, sign me up.

An article written by Cem Dilmegani, the founder of an AI-centric data company, condenses the results of major surveys of AI researchers. When asked, “Will singularity ever happen?” most AI experts surveyed said yes. When asked, “When will the singularity happen?” most answered, “Before the end of the century.” This is concerning, even taking into account that experts have previously predicted milestones in AI development that should have been reached by now and haven’t been. Again, it seems nobody can predict the future, yet.

I’ve seen many online describe a future “utopia” where we’ve achieved AGI, solved all our problems, and humans are free to do whatever they want all day while we reap the benefits of a UBI. Entertainment is created on the fly and customized for our states of mind. The problem is that this is highly unlikely and skips over us solving global problems such as climate change. And with nothing but free time and no desire to progress, we would truly be redundant. If anything we can do AI can do better, what’s the point of doing anything? Inherent meaning, for the sake of it? Is that good enough to keep all of humanity going?

Tell it to me straight

It’s very difficult to get a straight answer on this. I assume the best way to find out the right level of concern would be to call a safety researcher at OpenAI or DeepMind and ask, but I suspect even amongst that group there are different levels of concern. Many are probably techno-optimists who are excited for the future and have quenched their thirst with the AI Kool-Aid.

AI research suffers from being abstract and technical, making it difficult for those outside the field to really know what’s going on or how to feel about it. I don’t want to sound like an anti-tech Luddite, because I do believe the technology being worked on can add a lot of value to our world, and of course, the cat’s already out of the bag with AI. But I want to keep it from crossing the line into making parts of our lives too automatic and making us unnecessary. I work in the technology industry, but I’m a big believer in balance and wish we were working towards technology where it’s needed, not where it’s negatively disruptive. It’s tiring to fight ourselves for the greater good against the problems, situations, and technology we’ve created.

Combining my hopes with reality, I still want to find the “truth,” but it feels like we won’t know until we know, you know? Life is short and big changes take time, so maybe I’ll find peace in focusing on what’s important and crossing bridges when necessary. Wouldn’t that be nice? It’ll be interesting to see how the next few years play out with the development and application of this technology, though I still believe we should keep some things sacred. If I’m totally off base on these thoughts, let me know. I’ll actually be relieved if there’s “not as much to worry about” and I can just move on to my next existential crisis sans AI.

![Sketch of the embodied cognition perspective [11]](https://www.researchgate.net/profile/Pierre_Levy6/publication/295399680/figure/fig2/AS:331556657877000@1456060675426/Sketch-of-the-embodied-cognition-perspective-11.png)